Chat-based conversation with AI has revolutionized how people interact with machines. Tools such as ChatGPT have shown how powerful text can be: it is quick, accurate, and accessible to everyone. But language is much more than words on a screen. Our voices carry emotions, nuances, and tonality that are often lost in a text field. From a friendly smile in the tone of voice to the urgency of an emphatic phrase: pure text cannot convey what voice and spoken communication express.

The OpenAI Realtime API (beta) opens up new opportunities to go beyond classic chatbots. It enables real-time streaming of text and speech and combines speech recognition, speech synthesis, and integration into dynamic conversations. It allows developers to create AI experiences that come across as not only smart but also human.

Marias Obert likes to build prototypes with the latest cloud technologies and to talk about them. His career started in UI development in sunny California. During this time he learned to love web technologies such as JavaScript and especially the entire Node.js ecosystem.

This opens up new areas of application. Phone calls are an obvious use case. The technology has a lot of potential, especially in call centers and customer communication. Here, the AI system can not only answer routine questions but also analyze complex concerns, generate appropriate answers, and optimize interactions through natural speech. And if the AI does not know something because of a lack of training data, internal data sources can be connected.

The following shows how to link the beta version of the OpenAI Realtime API with a private API for pricing and make it accessible to everyone via phone calls. The article covers the technical foundations of this integration, the challenges and benefits in practice, and how this technology can change the future of customer communication.

What is behind the OpenAI Realtime API?

In early 2024, developers still had to combine text-to-speech (TTS), speech-to-text (STT), and large language models (LLMs) themselves to create a voice AI application. This brought many technical challenges, especially regarding latency. Even a delay of a few seconds between question and answer can quickly make a conversation feel unnatural.

In May 2024, OpenAI surprised the developer community with an impressive demo of GPT-4o real-time translation. The system showed how speech input is processed, translated, and output in real time, without a noticeable delay. Suddenly it was clear: a powerful real-time solution for speech and text was within reach, and expectations in the community rose. After that, however, things went quiet for a few months. On October 1, 2024, OpenAI launched the long-awaited beta version of the Realtime API. With this API, the vision of combining speech, text, and AI in real time becomes reality. The Realtime API provides integrated functions for:

- Speech recognition (STT): Direct transcription of spoken language with high accuracy.

- Real-time text processing: Use of powerful GPT-4 models for processing and generating text.

- Speech synthesis (TTS): Conversion of answers into human-like speech.

The API is designed specifically to reduce latency and enable a fluid conversation experience, a decisive step forward for using AI in applications such as telephone services, virtual assistants, or real-time translation. The API currently costs between $10 and $100 per 1 million audio tokens, depending on the model used and its version. According to OpenAI, this corresponds to roughly $0.006 to $0.06 per spoken minute. In addition, depending on the provider, the telephone connection costs approximately $0.01 per minute.
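As a rough back-of-the-envelope sketch, the figures quoted above can be turned into a per-call estimate. The helper names are hypothetical, the numbers are only the ones cited in this article, and the calculation ignores any difference between input and output audio, so treat the result as an order-of-magnitude guide rather than a price quote.

```python
# Figures quoted in the article; real prices depend on model and version.
AUDIO_PRICE_PER_MILLION_TOKENS = (10.0, 100.0)  # USD, low and high end
PRICE_PER_SPOKEN_MINUTE = (0.006, 0.06)         # USD, as attributed to OpenAI
TELEPHONY_PER_MINUTE = 0.01                     # USD, approximate, provider-dependent


def implied_tokens_per_minute(price_per_minute: float, price_per_million: float) -> float:
    """Audio tokens per spoken minute implied by the two quoted prices."""
    return price_per_minute / price_per_million * 1_000_000


def estimate_call_cost(minutes: float, price_per_minute: float) -> float:
    """Rough cost of one phone call: model audio plus telephony."""
    return minutes * (price_per_minute + TELEPHONY_PER_MINUTE)


low = estimate_call_cost(5, PRICE_PER_SPOKEN_MINUTE[0])
high = estimate_call_cost(5, PRICE_PER_SPOKEN_MINUTE[1])
print(f"5-minute call: ${low:.2f} to ${high:.2f}")
```

Both ends of the quoted range imply about 600 audio tokens per spoken minute, and a five-minute call lands at roughly $0.08 to $0.35 including telephony.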

Working with the OpenAI Realtime API

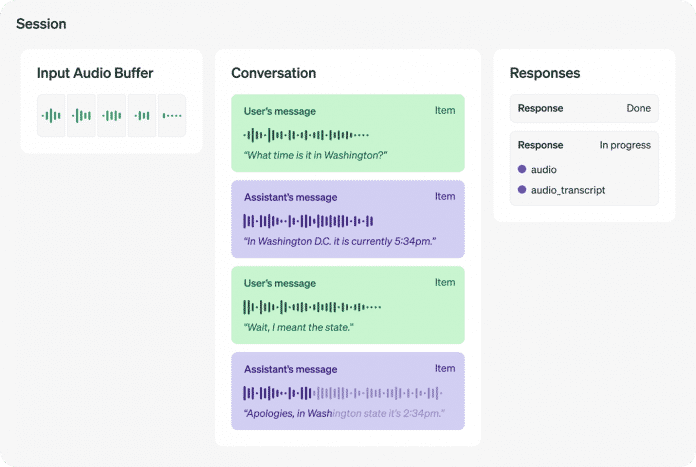

To take advantage of the full potential of the API, it helps to understand its architecture and core mechanisms (see Fig. 1):

A conversation (session) consists of several “conversation items” that can come from both participants in the conversation (Fig. 1).

(Image: OpenAI)

The API turns every conversation into such conversation items. These represent units of the dialogue, such as user input, AI responses, or intermediate results. Developers can manipulate items in a targeted way, for example to change the course of the interaction, remove irrelevant parts, or regenerate answers. This makes it considerably easier to control complex dialogues. The API reference contains a complete list of all conversation items. Those relevant to this article are discussed below.
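To make the idea of conversation items more concrete, here is a minimal sketch of the JSON client events an application might send over the Realtime API's WebSocket connection. The event and field names follow the beta API reference as far as known and may change; the helper functions and the ID `item_123` are hypothetical and only serve as illustration.

```python
import json


def user_text_item(text: str) -> dict:
    """Client event that appends a user text message to the conversation."""
    return {
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [{"type": "input_text", "text": text}],
        },
    }


def delete_item(item_id: str) -> dict:
    """Client event that removes an item from the conversation history."""
    return {"type": "conversation.item.delete", "item_id": item_id}


def request_response() -> dict:
    """Client event that asks the model to answer based on the current items."""
    return {"type": "response.create"}


events = [
    user_text_item("What does the premium plan cost?"),
    request_response(),
    delete_item("item_123"),  # hypothetical ID of an irrelevant item
]
for event in events:
    print(json.dumps(event))  # in a real app: send over the WebSocket
```

The sketch only builds and prints the payloads; opening the connection and authenticating are left out on purpose.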

For natural spoken interaction, turn detection is essential. The API uses voice activity detection (VAD) to identify pauses in speech and the end of sentences. As a result, the AI can reliably determine when the other party has finished speaking and when it should start its answer. This ensures fluid communication without users having to give explicit input signals. Developers can tune the VAD parameters to adapt the behavior to specific scenarios such as long pauses or rapid reactions. If the parameter is switched off, the AI waits with its answer until a response is explicitly requested.
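The VAD tuning described above could look roughly like the following sketch. It builds a `session.update` client event; the field names (`turn_detection`, `server_vad`, `threshold`, `prefix_padding_ms`, `silence_duration_ms`) are taken from the beta documentation as far as known and may change, while the helper function and its default values are assumptions for illustration.

```python
import json
from typing import Optional


def turn_detection_update(
    silence_ms: Optional[int],
    threshold: float = 0.5,
    prefix_padding_ms: int = 300,
) -> dict:
    """Build a session.update event that tunes or disables server-side VAD.

    Passing silence_ms=None switches turn detection off, so the model only
    answers when the client explicitly requests a response.
    """
    if silence_ms is None:
        turn_detection = None
    else:
        turn_detection = {
            "type": "server_vad",
            "threshold": threshold,              # activation sensitivity, 0.0 to 1.0
            "prefix_padding_ms": prefix_padding_ms,
            "silence_duration_ms": silence_ms,   # pause that ends a turn
        }
    return {"type": "session.update", "session": {"turn_detection": turn_detection}}


patient = turn_detection_update(silence_ms=1200)  # tolerate long pauses
snappy = turn_detection_update(silence_ms=300)    # react quickly
manual = turn_detection_update(silence_ms=None)   # VAD off
print(json.dumps(manual))
```

A longer silence window suits callers who pause to think, while a shorter one makes the assistant feel more responsive at the risk of cutting people off.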

Sometimes it is also necessary to interrupt the ongoing conversation, for example if the user wants to correct a statement. For this, the API provides the Truncate item, which lets developers cut off the current flow of conversation and replace it with new input. This makes it possible to react flexibly to user requests without losing the context of the entire session.
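A minimal sketch of such an interruption, assuming the application tracks which assistant item is currently playing and how many milliseconds of its audio the caller has already heard. The event names `response.cancel` and `conversation.item.truncate` come from the beta API reference as far as known; the helper function and the example ID are hypothetical.

```python
def interrupt_events(item_id: str, played_ms: int, response_active: bool) -> list:
    """Client events that stop the assistant mid-sentence.

    item_id:         ID of the assistant audio item being played
    played_ms:       audio the caller has actually heard so far
    response_active: whether the model is still generating the answer
    """
    events = []
    if response_active:
        # Stop generation of the answer that is still in progress.
        events.append({"type": "response.cancel"})
    # Trim the stored item so the context matches what was really heard.
    events.append({
        "type": "conversation.item.truncate",
        "item_id": item_id,
        "content_index": 0,
        "audio_end_ms": max(0, played_ms),
    })
    return events


for event in interrupt_events("item_456", played_ms=1500, response_active=True):
    print(event)
```

Truncating to the audio actually played keeps the session context consistent, so the model does not assume the caller heard the rest of the answer.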

Another key mechanism is function calling. It enables the AI to call predefined functions during a conversation. This is particularly useful if the application needs to fetch additional data from external sources, perform calculations, or control other systems. Developers must define these functions in their application and ensure that the API can access them correctly to guarantee smooth interaction.
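The following sketch illustrates one possible shape of function calling, under several assumptions: `get_price` is a hypothetical stand-in for a private pricing API with made-up demo data, and the event layout (a `response.output_item.done` server event carrying a `function_call` item, answered with a `function_call_output` item) follows the beta API reference as far as known and may change.

```python
import json


def get_price(product: str) -> dict:
    """Hypothetical stand-in for a private pricing API (made-up demo data)."""
    prices = {"basic": 9.99, "premium": 29.99}
    return {"product": product, "price_usd": prices.get(product)}


TOOLS = {"get_price": get_price}

# Announces the function to the model at the start of the session.
SESSION_UPDATE = {
    "type": "session.update",
    "session": {
        "tools": [{
            "type": "function",
            "name": "get_price",
            "description": "Look up the current price of a product.",
            "parameters": {
                "type": "object",
                "properties": {"product": {"type": "string"}},
                "required": ["product"],
            },
        }],
        "tool_choice": "auto",
    },
}


def handle_server_event(event: dict) -> list:
    """Answer a finished function_call item; ignore every other event."""
    item = event.get("item", {})
    if event.get("type") != "response.output_item.done" or item.get("type") != "function_call":
        return []
    result = TOOLS[item["name"]](**json.loads(item["arguments"]))
    return [
        {
            "type": "conversation.item.create",
            "item": {
                "type": "function_call_output",
                "call_id": item["call_id"],
                "output": json.dumps(result),
            },
        },
        {"type": "response.create"},  # let the model speak the result
    ]
```

Keeping the callable functions in a dictionary means the model can only trigger what the application has explicitly registered.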

The API also includes an integrated moderation system that automatically identifies undesirable content and thus enables safe and controlled applications. If an answer is blocked, developers can use response.cancel to cancel the output immediately and thus continue the dialogue in a controlled manner.

Finally, the temperature parameter plays an important role in tuning the AI's behavior. As with the chat variant ChatGPT, this setting affects how creative or focused the model's answers are. The minimum value (currently 0.6) is suitable for structured and accurate answers, while higher values yield more creative but potentially unexpected responses. Developers must adjust the temperature carefully to tailor the model to the requirements of their application.

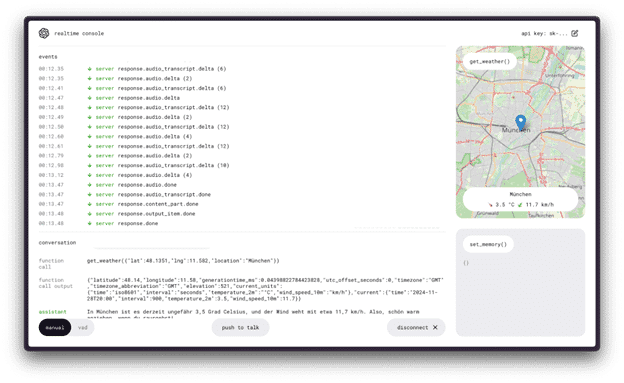

To get to know the API better, it is worth cloning the OpenAI Realtime Console from GitHub and running it locally (see Fig. 2). This tool makes it possible to test the API's functions in an interactive environment, observe the various events in real time, and better understand the mechanisms behind the API. In addition, the console comes with two prebuilt tools that were developed specifically for trying out function calling and easing its integration into your own applications. This hands-on test environment is an ideal starting point for using the API efficiently.

The Realtime Console is ideal for testing the API locally (Fig. 2).

(Image: Screenshot (Marias Obert))
